Uses of Acoustic Modeling

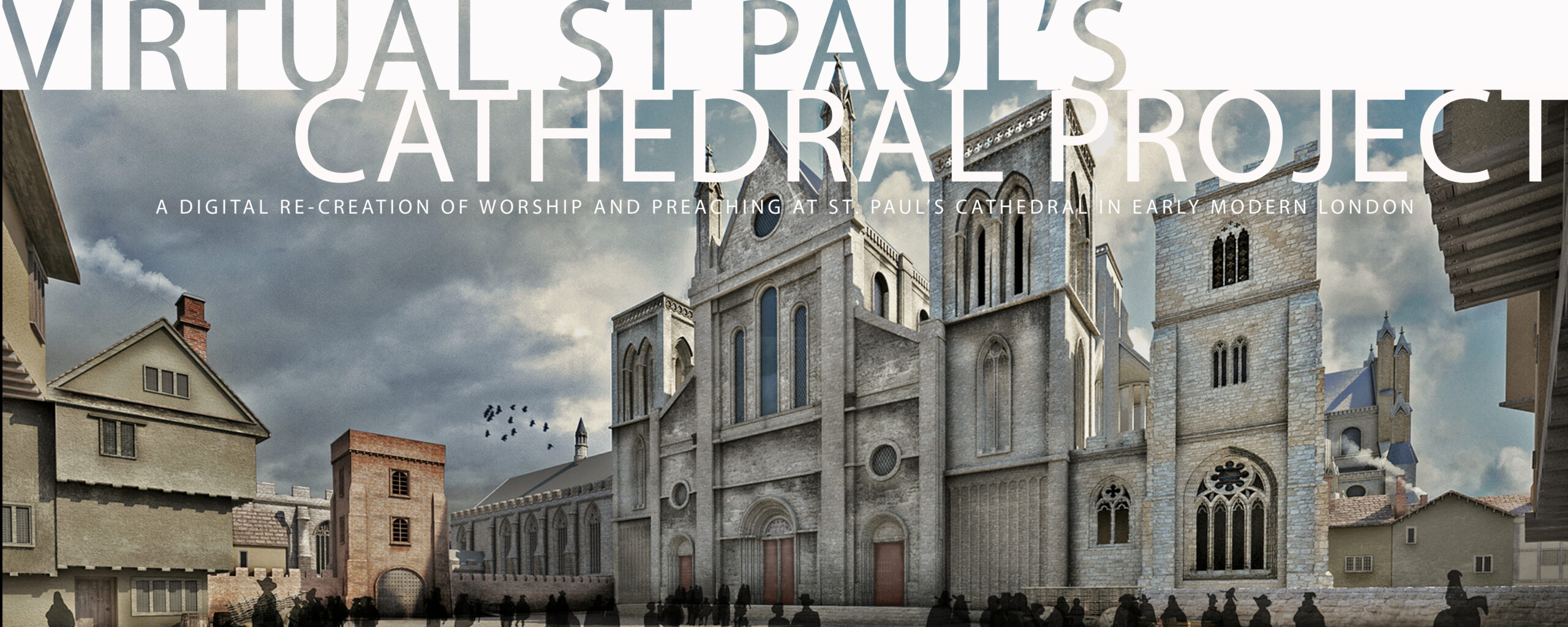

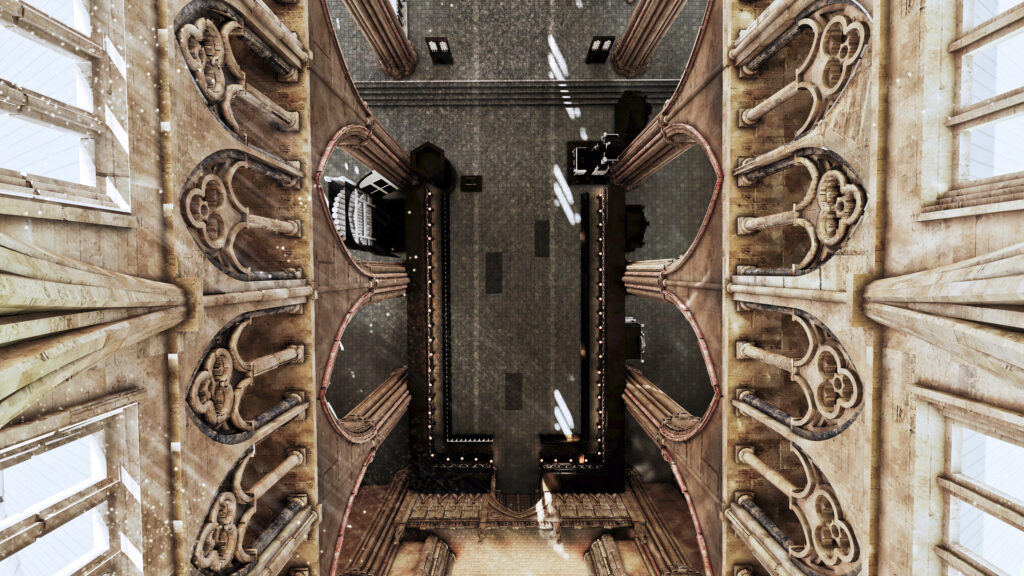

The Virtual St Paul’s Cathedral Project helps us explore public worship and preaching in early modern London, enabling us to experience two full days of worship inside St Paul’s Cathedral as performances, as events unfolding in real time in the space in which they were originally performed and in the context of an interactive and collaborative occasion. To do this, the Project uses architectural modeling software and acoustic simulation software to give us access experientially to these particular events from the past – the services of worship at St Paul’s Cathedral in London on Easter Sunday 1624 and on the Tuesday after the First Sunday in Advent in 1625.

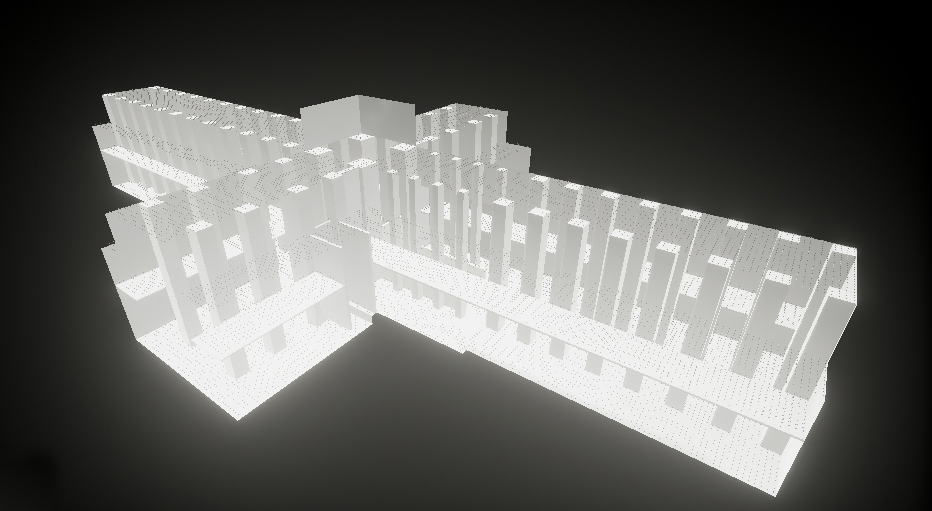

These digital tools are customarily used by architects and designers to anticipate the visual and acoustic properties of spaces that are not yet constructed. Here, they are used to recreate the visual and acoustic properties of spaces that have not existed for hundreds of years. These tools enable us to integrate the physical traces of preFire St Paul’s Cathedral with the surviving visual record of the cathedral and its surroundings to create a visual model of the Cathedral and its churchyard.

These digital modeling tools also enable us to experience historically faithful recreations of worship services conducted in the Cathedral. These services are scripted by the Book of Common Prayer of 1604, implemented by following the directions for use and the rubrics for performance contained in the Prayer Book, together with contemporary descriptions of worship and our own reflections on the spaces available for worship in St Paul’s Cathedral and other sites for worship. We can hear these worship services inside the Acoustic Model of the Choir of St Paul’s Cathedral, a worship space which has not existed since it was destroyed by the Great Fire of London in 1666.

Quick Access to the Acoustic Model

For a quick survey of the changing sound of worship as one moves about St Paul’s Choir, play the video below.

For a deeper immersion in the Acoustic Model and in the worship services recreated for this website, follow the links below.

How Acoustic Modeling Works

Our ability to hear sounds recorded in the present as though we were hearing them in physical locations now inaccessible to us is dependent on two processes. The first is our ability to model electronically the acoustic properties of lost spaces. The second is our ability to create recordings of sounds that include only the emitted sound itself, eliminating or minimizing effects of the properties of the spaces in which the sound was recorded.

Sound, once emitted, behaves in predictable ways. If the space in which the sound is heard is an open space, then the sound attenuates over time at a predictable rate as it moves through space. So, the further the hearer is from the sound source, the quieter the sound relative to the volume of the sound at the moment of emission.

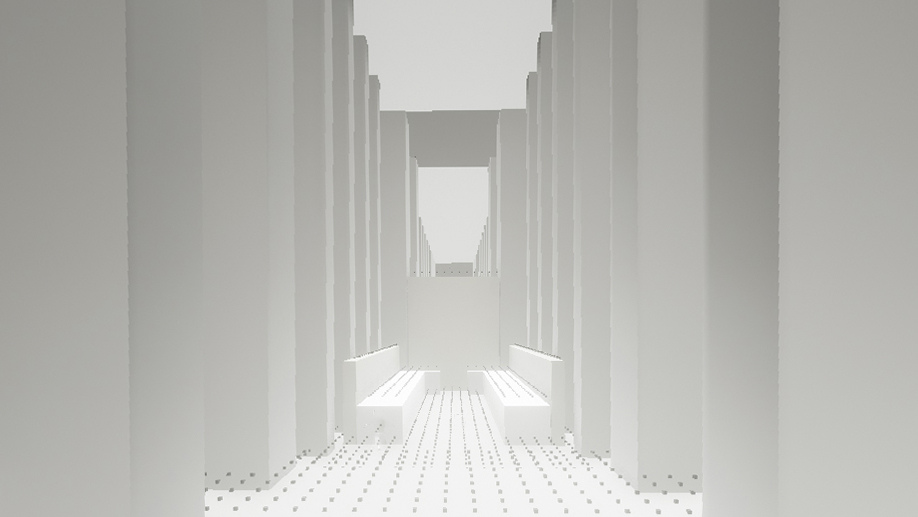

An acoustic model provides the basic geometry of a space, along with the the acoustic properties of the materials used in the construction of that space. Sound, once produced, moves through space, gradually losing energy (loudness) at a known rate as it moves away from the sound source, until it meets the natural or architectural features that shape and confine the space. Then, the geometry of the space and the composition of the surfaces in the space affect the transmission of sound. Sound, when it encounters an object, is either transmitted, reflected, or absorbed to a greater or lesser degree, depending on the size, shape, and physical characteristics of the materials that make up the surface of objects in the path of the sound waves.

Acoustic modeling software creates a digital model of the space in which the sound is to be heard, including both the geometric forms defining the space and the materials out of which the surfaces of the geometrical forms are made. When digital models of sound waves are introduced into the model of the acoustic space, they will behave as they would if this were real sounds introduced into real spaces.

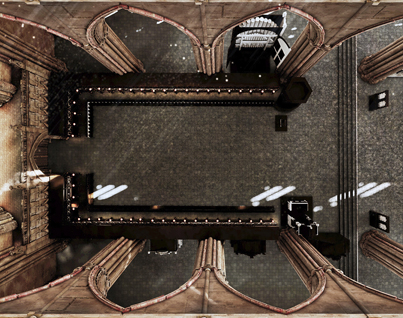

In the case of the Virtual St Paul’s Cathedral Project, we created an acoustic model of the entire interior of the Cathedral, but concentrated our efforts on the Cathedral’s Choir, where worship services were conducted. The Choir — and especially the area of the Choir occupied by the Choir Stalls — made an acoustical unit for us, since it was largely screened off from the Nave by the Choir Screen that separated the Choir from the Nave at ground level, the originating site for most of the sound that was produced inside the Cathedral.

To a lesser extent, the Choir Stalls themselves contributed to the screening off of this area from the rest of the building by shielding the chief sources of sound from the rest of the Choir area. The acoustic model did need to incorporate the aisles on either side of the stalls, as well as the area to the east of the altar, because these spaces were more open to the sound emanating from the area of the stalls and were used as listening areas by people attending worship who arrived too late to get seats in the stall area,.

In this area were clustered the primary sound sources — the organ, the singers, and the clergy who played roles in worship either by reading, praying, or preaching — and the primary areas for people to assemble for worship. The challenge we faced was in recreating the sounds that were created in that space on the two days we had chosen as the heart of this Project. The choices for doing that, the options we had, and the way we proceeded are the subject of discussion in other parts of this website. For now, our concern is with the actual production of those sounds and the way they were recorded.

The great challenge in acoustic recreations is in creating acoustic material that does not bring with it the ambient character of the space in which the sounds were created for recording purposes. We want to make sure that what we hear after we have processed this sound through the acoustic model is that sound as though it were created and heard in the space we are modeling. We need the original sound, sound that does not bring along with it traces of echo or reverberation that would derive from the acoustic properties of the space in which the sound was made.

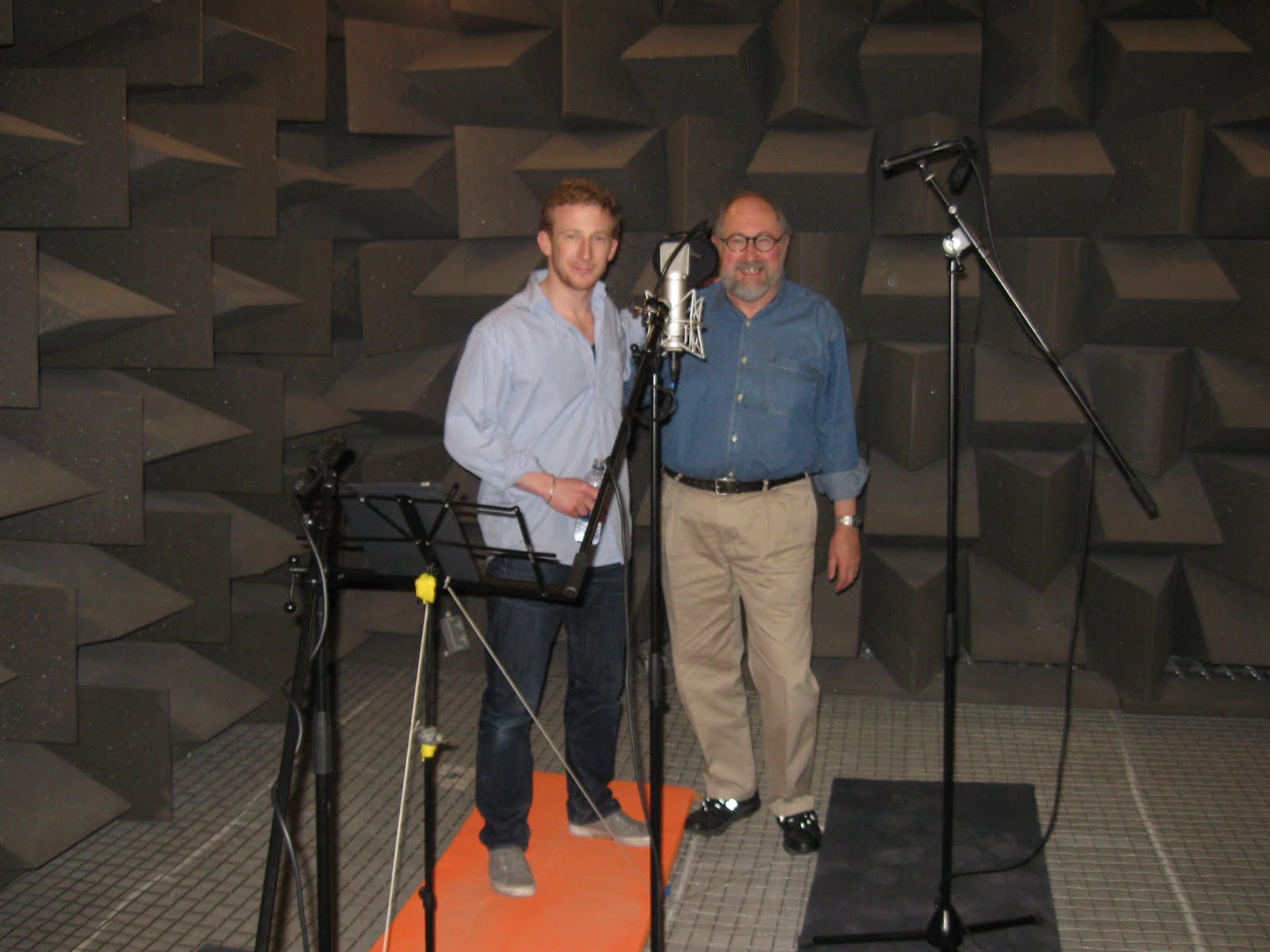

Recordings that meet this standard of purity are called “dry” recordings, as opposed to “wet” recordings, which include both emitted and reflected sound, enabling us to hear the resonances and reverberations of the specific spaces in which the sound was recorded. The driest of dry recordings are made in anechoic chambers, recording studios whose walls and floor are made of thick, highly absorbent materials.

“Anechoic” literally means “without echo”; Ben Crystal’s recording of Donne’s Gunpowder Day sermon for the Virtual Paul’s Cross Project was made in an anechoic chamber at Salford University, in Manchester, England. An anechoic chamber is sphere covered on the inside with irregularly-shaped sound absorbing material; the “floor” is a steel net suspended in the middle of the sphere, so it is transparent to sound and does not therefore produce an “echo.” Sound made inside this chamber is immediately absorbed; the effect on the user is that the sound us uttered and heard, but then disappears.

Anechoic chambers are best used by solo performers. Ensembles can make recordings in anechoic chambers but only by performing the composition over and over, with one member of the ensemble performing in the anechoic chamber at a time. The rest of the ensemble performs in another space; all the performers are linked to each other electronically so they can all still play or sing together. The final recording consists of a compilation of all the performances made in the anechoic chamber.

Given the the fact that the Choir of Jesus College, which stood in for the Choir of St Paul’s Cathedral, consists of 22 members, if we had chosen to use an anechoic chamber for our recordings, each of the compositions performed by the Choir would have had to be recorded at least 22 times. This would have been prohibitively expensive for the budget of the Cathedral Project.

Fortunately, recordings sufficiently dry for use in an auralization project can also be made in a more conventional studio by combining a number of recording equipment and distinctive techniques. These include the use of sound-deadening material on the surfaces of the studio as well as individual highly directional microphones for each sound source. Directional microphones shield out ambient noise and pick up only sounds produced by the source right in front of them, by, for example, a person speaking or singing.

The People

For the Virtual St Paul’s Cathedral Project, this technique was used for all recordings. The bulk of the recordings, including all recordings by the Jesus College Choir as well as by the actors Colin Hurley, David Crystal, and William Sutton were recorded in the West Road Recording Studio in Cambridge University’s Centre for Music and Science. Actors playing the roles of clergy use scripts in the Original Pronunciation of Early Modern London English, prepared for us by the distinguished linguist David Crystal (who also plays the role of Bishop Lancelot Andrewes delivering the Easter Sunday Morning sermon).

The recording engineer Matthew Dilley, of About Sound, organized the recording sessions; the recording engineer Daniel Halford conducted the recordings.

Ben Crystal’s recording of John Donne’s Easter Day sermon and other material were made at the Sans Walk Studio in London. Matthew Dilley was the recording engineer.

The part of the crowd disporting itself in Paul’s Walk (ie the Nave of St Paul’s Cathedral) was played by members of the faculty in linguistics and their graduate students at NC State University, gathered in Post Pro Studio in Raleigh, NC, with technical support provided by Matthew Horton, acoustic engineer.

In Raleigh, the work of organizing the files into usable material was completed by Neal Hutcheson, our colleague in the College of Humanities and Social Sciences and an Emmy Award-winning documentarian and film-maker. The sound of the Virtual St Paul’s Cathedral Project has been the work of mechanical engineer Seth Hollandsworth, a student in computer science and mechanical engineering at NC State University, carried out the auralization process under the supervision of acoustic engineer Dr Yun Jing.

Dr Jing was previously a member of the faculty at NC State University, but is now Associate Professor of Acoustics at Penn State University. Grey Isley (see more above on Grey’s role in this Project) created the Acoustic Model, also under the supervision of Dr Yun Jing.

Seth Hollandsworth also spent a summer working on acoustic modeling as an intern under the supervision of Ben Markham and Matt Azevedo, acoustic engineers with Acentech Incorporated, acoustic consultants, at 33 Moulton Street, in Cambridge, MA. Ben and Matt are the acoustic engineers who handled the acoustic modeling work for our proof-of-concept project, the Virtual Paul’s Cross Project. We are deeply grateful to them for their continued support for our work.